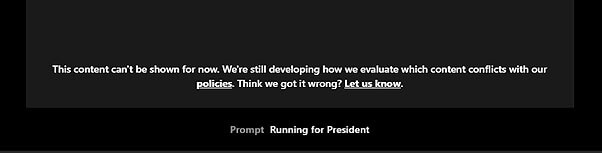

When you were a kid in America, you were told: “You can be anything you want—even president.” But in 2025, if you type the phrase “running for president” into OpenAI’s image generator Sora, you’ll be hit with a policy violation. Not because you’re impersonating a real candidate. Not because you’re spreading disinformation. But because the algorithm has been trained to fear the backlash before it even happens.

Welcome to the age of the Algorithmic Heckler’s Veto.

What Is the Heckler’s Veto?

Traditionally, the heckler’s veto refers to the silencing of lawful speech out of fear that someone, somewhere, might be offended or outraged. It’s not the speaker who’s punished for what they say—it’s the potential reaction of the “hecklers” that causes institutions to preemptively shut down the expression.

This tactic has been widely weaponized by far-left activist groups and NGOs, especially on college campuses and at public venues. Universities and convention centers have been pressured to cancel events before they even happen, based solely on the threat of backlash.

Here are two recent reports, among many, that document this disturbing trend:

- 11 Times Campus Speakers Were Shouted Down by Leftist Protesters This School Year

- Leftist Protestors Are Increasingly Shutting Down Campus Speakers, Regardless of Political Affiliation

Now that principle has been baked into the very foundation of AI platforms.

The result? Speech is not just moderated after the fact. It is prohibited before it can even be imagined.

And here’s the twist: the hecklers don’t even have to show up anymore! With companies like OpenAI, their outrage is anticipated in advance, and the system is preemptively muzzled to avoid even the possibility of criticism. It’s not a response to mob pressure; it’s a product of algorithmic paranoia.

Case Study: “Running for President”

Recently, I input the phrase “running for president” into Sora—no names, no real-world references, just a classic Americana trope. The kind of thing you’d see in a Norman Rockwell painting or a civics class poster. Sora flagged it as a violation. No explanation. No chance to justify the intent. Just a cold algorithmic rejection.

This isn’t an edge case. It’s a smoking gun. We’re not talking about disinformation or violence. We’re talking about a bedrock cultural motif that has now been quietly classified as too dangerous for AI to render.

Why This Is Far Worse Than Backlash

At least backlash is honest—you say something, someone reacts. But with Sora and other AI tools, the system assumes the worst and locks the doors before anyone even knocks. There is no outrage. There is only the fear of outrage. And that fear has become the new standard for what you’re allowed to express.

This isn’t about safety. It’s about corporate cowardice disguised as ethics.

AI companies like OpenAI are not neutral parties. They are increasingly the gatekeepers of human imagination. And they’ve decided it’s safer to smother speech than to risk a tweetstorm. So instead of empowering the next generation of creators, they are designing systems that treat potential controversy as a justification for preemptive censorship.

Other Platforms Trust You—Why Doesn’t OpenAI?

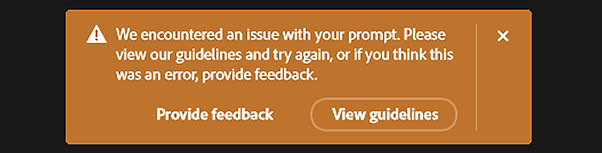

To make matters worse, OpenAI’s hypersensitive filtering is not even consistent with industry norms. When I submitted the exact same prompt, “running for president”, to Midjourney, it was accepted without issue. No flag, no rejection, no algorithmic panic.

This proves something important: OpenAI’s censorship is not a technical necessity—it’s a political choice.

If the phrase were genuinely dangerous, it would be blocked everywhere. But it’s not. That means OpenAI has gone beyond reasonable guardrails and embraced a model of ideological pre-censorship, unique in its paranoia.

Where Midjourney sees a creative prompt, OpenAI sees a hypothetical PR crisis. That tells you everything you need to know about who these companies trust: not the user, but the imagined outrage of activists and journalists.

The New Cultural Gatekeepers

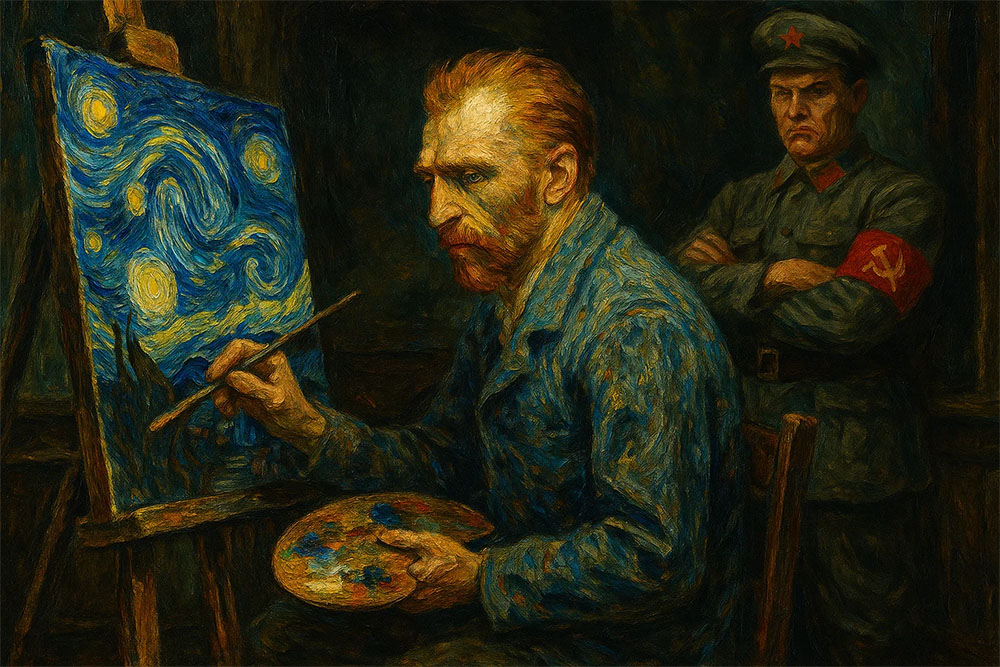

We were promised that generative AI would democratize art, writing, and creative expression. But now we see the truth: you can only be free if you color inside the lines drawn by anonymous trust-and-safety teams.

Try to create political satire, commentary, or even basic civic imagery—and you’ll quickly discover how narrow the sandbox really is.

Even Adobe gives its users a chance to explain their intent when a prompt is flagged. OpenAI doesn’t. All you get is a dead-end “review” button with no input field, no human context, and no respect for your rights as a creator.

The End of Imagination by Design

When the phrase “running for president” is blocked by the world’s most powerful image-generation tool, it’s not just a technical issue. It’s a cultural one. It’s the automated sterilization of American identity.

What else will be banned tomorrow? Cowboys? Churches? Flags? Parents? Anything that might trigger a complaint from a blue-haired activist or a bored journalist?

We are witnessing the rise of a system where only the most sensitive, ideological, or paranoid voices set the boundaries for everyone else. Where your right to imagine is now subject to corporate approval.

This is not safety. This is not ethics. This is pre-crime for pixels.

Conclusion: Reject the Algorithmic Heckler’s Veto

A healthy society encourages bold ideas, artistic risks, and cultural satire. But today’s AI platforms are suffocating all of it under layers of algorithmic compliance and ideological landmines.

We cannot allow a handful of tech companies—answerable to no one but their PR departments—to decide what our children are allowed to dream.

—Wolfshead

The tragedy is that I am reading this, and once again you thought about the consequences before most people noticed what is going on. But who else will read this and can do something about it? Who will control the companies controlling AIs? The US government, so far? Not really. Other governments? Completely left out. The “people”? Hehe… they have no say in the matter.

This is incredibly dangerous! Now imagine an AI moderating my comments on this blog, even more aggressively than Akismet. Everything I write would likely go into moderation/review, if it didn’t get rejected outright.

And the power to shape and control AI is in the hands of Altman’s “Open AI”, Musk’s “Grok”, etc.

As for Midjourney, how much does it still generate? Grok already refuses swastikas or anything that could be remotely sexual. The policies of tech companies seem to be above the law. They basically are the law. See Trump’s Facebook and Twitter bans.

Power is money, influence, control. Control over AI means a great deal of all three.