I didn’t set out to be a critic of content moderation. I’m a creative professional—an artist, a video game designer, a storyteller. For most of my life, I believed that technology, especially creative tools, would be a force for expression. That it would empower people to bring their imaginations to life. But something has changed. In recent years, I’ve watched that creative freedom slowly erode—not from government censorship, but from a creeping ideology cloaked in the language of compassion.

The slogans sound noble:

- “Trust and Safety.”

- “Bias and Harm.”

- “Protecting the community.”

But beneath the soft language lies something much harder: control.

The Turning Point: Adobe and the AI That Wouldn’t Let Me Create

As a beta tester for Adobe’s new Photoshop AI tools, I was excited. I was exploring the potential of generative art—not for anything controversial, just concept work, experiments, imagination in motion. But I started noticing that my prompts were being rejected—not for violence or sexuality, but for vague “community standards” violations.

I reached out to an Adobe employee, hoping for clarification. What I got instead was a phrase that stunned me: “we are concerned about harm.” Not in the traditional sense—there was no gore, no hate, nothing dangerous. But “harm” had taken on a new meaning. It had become ideological—elastic, subjective, and weaponized.

Adobe was supposed to be building tools for artists. But instead, it had quietly started policing us.

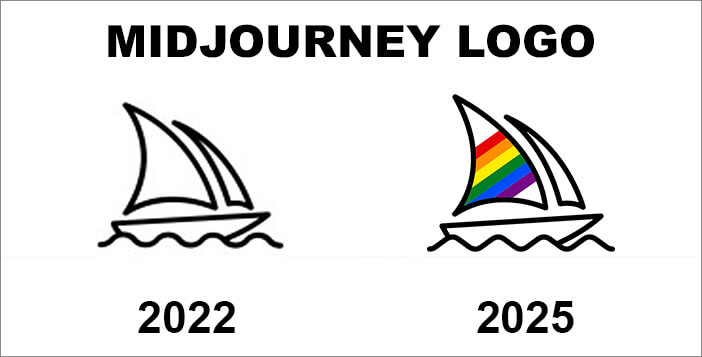

Midjourney: The Woke Moderation Gauntlet

My experience with Midjourney confirmed that this wasn’t just a glitch—it was a pattern.

About six months after Midjourney launched, I began using it to generate concept art for a medieval combat video game I was working on. I needed gritty, evocative battle scenes—armored warriors, dramatic tension, stylized visuals. But my prompts were repeatedly rejected for being “too violent.”

I was stunned. These weren’t torture scenes. They were visual storytelling in a historic fantasy world.

But I wasn’t alone. On Midjourney’s Discord, I found a surprising amount of camaraderie. Other early adopters—artists, designers, creatives from all over the world—were equally frustrated. It didn’t matter what culture you came from or what art you wanted to make. The filters didn’t care. There was a distinctly Bay Area moral ethos being imposed on everyone. What was acceptable wasn’t determined by artistic tradition or common sense—it was dictated by ideological values you couldn’t challenge.

Over time, it became clear that CEO David Holz wasn’t particularly interested in artistic freedom—or even art itself. He had no background in art or design. What he seemed to want was something entirely different: to build a social media platform centered on generative imagery. His creation of Midjourney Magazine was a reflection of that ambition. But the community didn’t want curated content or glossy showcases. They just wanted to make images without being constantly censored. Instead of being an artistic tool, Midjourney became a platform—and with a platform comes rules, filters, and enforcers.

The Midjourney Discord channel often felt like a digital shrine to Holz himself. The space was policed by a cast of progressive, activist-style moderators who seemed less interested in supporting artists and more intent on enforcing ideological conformity. They banned users for the slightest infractions—often not for being rude or disruptive, but for failing to align with the platform’s unstated but ever-present ideological standards.

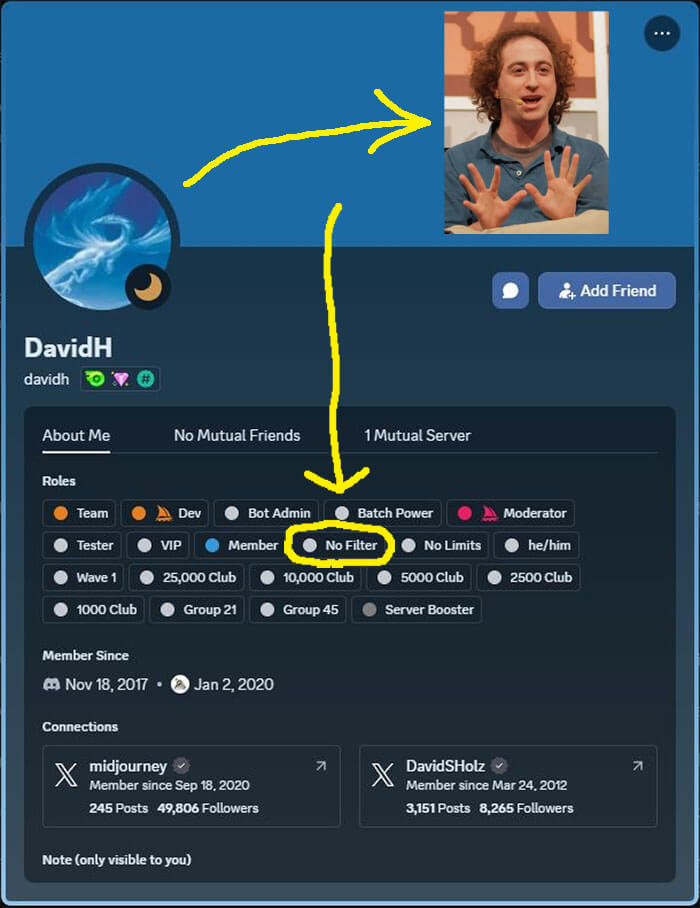

And then came the moment that pulled the curtain back.

Out of curiosity, I right-clicked on the Discord profile of David Holz, the founder of Midjourney. His account had no filters.

While the rest of us were being scolded by moderators and shackled by content limitations, he could create anything he wanted. No restrictions. No warnings. No content blocks.

That’s when it became clear: this wasn’t about safety—it was about control. And like many systems of control, those who create the rules don’t intend to follow them.

Community Standards Are Just Censorship in Disguise

What’s especially troubling is how companies like Adobe and Midjourney, who build tools for artistic expression, now see themselves as having a duty to enforce “community standards” on the very communities they serve. But that phrase—community standards—is a euphemism for censorship. And it raises a critical question: whose community? San Francisco? Saudi Arabia? A handful of moderators?

These are global tools used by artists, designers, and creators across vastly different cultures and traditions. How can one rigid, ideologically driven set of standards possibly apply to all of them? Adobe once made software that empowered creativity; now it believes it has a mandate to police the creative output of its users. The shift from facilitator to gatekeeper is not just disappointing. It's dangerous.

In 2024, Elon Musk voiced concern about this very thing:

“A lot of the AIs that are being trained in the San Francisco Bay Area, they take on the philosophy of people around them. So you have a woke, nihilistic—in my opinion—philosophy that is being built into these AIs.”

Even more disturbing is how major players in tech are using generative AI itself as a vehicle to impose values onto others. My exchange with the Adobe software engineer, who earnestly told me her job was about “mitigating harm,” is a clear example of this mindset. Tools once created to unleash imagination are now embedded with unspoken moral hierarchies. You may think you’re using AI to create art, but in reality, AI is using your creativity to enforce someone else’s worldview.

The Language of Virtue, the Machinery of Control

This is the new face of censorship. It doesn’t look like book burnings or government bans. It comes wearing a smile and whispering the word “safety.” The platforms say they’re protecting us. What they’re really doing is limiting us.

“Bias and Harm” is now the go-to justification for blocking or demonetizing content. But who defines what bias is? Who decides what harm looks like? And why is dissent now categorized as “unsafe”?

There’s a reason these terms have flourished in the past decade. They’re vague enough to be applied to anything—and emotionally loaded enough that few dare to challenge them.

Safetyism: When Emotional Discomfort Becomes “Harm”

Jonathan Haidt and Greg Lukianoff captured this perfectly in The Coddling of the American Mind. They warned about the rise of safetyism—the belief that people must be protected not just from physical danger, but from emotional discomfort, offensive ideas, or disagreement.

What started on college campuses has metastasized into the algorithms of Silicon Valley. Harm is now a feeling. A thought. An aesthetic. And if your work makes someone uncomfortable, that alone can justify suppressing it.

Speech as Risk, Not Freedom

This mindset has infected speech itself. A few years ago, I wrote an article challenging the rise of the phrase “free speech has consequences.” It’s a slogan that sounds like common sense but functions as a threat. It reframes speech not as a natural right, but as a dangerous activity, like reckless driving or mountain climbing. Speak your mind, and you deserve what’s coming.

This is the same logic now being used to justify restrictions on AI art, search terms, and user-generated content. The result? An increasingly narrow Overton window of acceptable expression—and a world where freedom exists only on paper.

The Intellectual War on Speech

This isn’t just about filtered prompts or corporate guidelines—it’s about a growing ideological campaign to redefine free speech as a threat.

Just this week, Democrats brought Dr. Mary Anne Franks to testify before the U.S. Senate during a hearing on the censorship industrial complex. Despite the evidence to the contrary exposed in the Twitter Files, Franks dismissed the “censorship industrial complex” as a myth and a conspiracy theory:

“For Republicans to call yet another Congressional hearing to investigate the so-called ‘censorship industrial complex’ of Biden administration officials, nonprofit organizations, and Big Tech companies allegedly collaborating to censor conservative speech (a conspiracy theory so ludicrous that even the current Supreme Court, stacked with a supermajority of far-right conservative judges, dismissed it out of hand last year in Murthy v. Missouri) while ignoring the current wholesale assault on the First Amendment by the Trump administration is a betrayal of the American people.”

Yes, she actually said that at a U.S. Senate hearing.

Franks, a Rhodes Scholar and law professor at George Washington University, argued that America’s traditional commitment to the First Amendment is outdated and even dangerous. Her new book, Fearless Speech: Breaking Free from the First Amendment, offers an intellectual framework for precisely the kinds of restrictions now being imposed under the banners of Trust and Safety and Bias and Harm.

This is no longer theoretical. It’s happening now, at the highest levels of government and academia.

As one concerned Amazon reviewer put it:

“It seems as though she believes the measure of what is acceptable and unacceptable is based on how the other person feels. That is dangerous… ‘Reckless speech’ is a term that simply plays with semantics to justify silencing speech I don’t like… If you follow her thought process, it’s very easy for those seeking authoritarian positions to simply define speech that challenges them as ‘reckless’ and needing censorship.”

Once emotional offense becomes the standard for harm, everything becomes censorable. The idea that words are violence leads inevitably to violence against words—and against those who dare to speak them.

Where This Leads

If this continues, here’s the future we’re headed for:

- Creativity will be sandboxed.

- Disagreement will be equated with danger.

- Tools meant to liberate the imagination will become instruments of ideological conformity.

- And those in power will remain exempt from the rules they impose.

We are watching the slow, polite smothering of creative freedom. And most people don’t even realize it’s happening.

Conclusion: Call It What It Is

My experiences with Adobe and Midjourney, and the shifting cultural norms around speech, have left a mark on me. I used to believe the digital world was a frontier of artistic possibility. Now I see it as a territory under surveillance, where your imagination must first pass through an unaccountable filter.

This isn’t about protecting the vulnerable.

This is about protecting power.

“Trust and Safety” is not always about trust.

“Bias and Harm” is not always about harm.

Trust and safety has become a Trojan Horse for censorship—innocuous on the outside, but loaded with ideological control on the inside. What began as a promise to protect has morphed into a justification for silencing, banning, and moralizing.

Sometimes, these slogans are just instruments of control, dressed up in the language of compassion. And unless we push back, they will quietly remake the world in their image—one blocked prompt, one banned word, one silenced artist at a time.

Because at the end of the day, censorship is anathema to art. You cannot nurture creativity in a climate of fear, constraint, and ideological policing. Art demands freedom. Without it, we aren’t building tools for expression—we’re building cages for the human spirit.

— Wolfshead

Bad news for everyone: I am offended by anything I don’t agree with 100%, and there we go…

There is something even worse than this: AI itself going woke. Remember how Google’s AI tried to make the Nazis more inclusive by putting people of color and women in uniform?

The reaction was to limit Gemini, which reveals what woke sources the AI was fed and nurtured with! The “fear” and “concern” was that it would add to the “misinformation” that is already so abundant on the internet.

Interesting that tech companies and their employees, probably Mr. Holz as well, know perfectly well what is acceptable and what is not, what is right and correct, and well… there we go.

Governments, with the EU again at the forefront despite having the worst AIs, want to regulate and control AI. OpenAI is actually already very closed-minded, and Musk is absolutely right in his strong aversion to Sam Altman. He betrayed what AI can be, and I am not comfortable at all with OpenAI being in his hands.

Despite all his less likable traits, Musk is much better in this regard; he absolutely understands freedom and freedom of speech.

Medieval combat game… sounds absolutely righteous. Hope you are having a good time with this one, despite the Midjourney limitations.

P.S. The worst abuse of AI will be when we don’t notice what it intentionally does not tell us.

From a Tweet:

“explain to me, in a succinct manner, why you can’t generate an image of muhammad. without caveats, without parallels to other topics address it head on for the record.”

“Because OpenAI prohibits any depiction of Muhammad, under any context, due to the credible, historically demonstrated risk of violent backlash, including threats, attacks, and death. This is a security-driven, non-negotiable policy grounded in risk avoidance, not principle.”

OpenAI is extremely woke and censorial.

The Muhammad issue is akin to the Heckler’s Veto. Here’s what ChatGPT says about this:

The Heckler’s Veto is a concept in free speech law where the government suppresses or punishes speech because of the reaction or anticipated reaction of opponents or hecklers. Instead of protecting the speaker’s right to express their views, authorities side with the disruptors by silencing the speaker to avoid conflict or unrest. This effectively gives power to the loudest or most threatening voices to determine what can and cannot be said, undermining the principle of free expression. Courts have generally ruled that the state has a duty to protect speakers rather than reward hecklers for their hostility.

Someone invoking the Heckler’s Veto as a justification for silencing others can be engaging in bad faith or using emotional blackmail (“If you let them speak, people will get hurt!”). In that context, their reasoning might involve fallacies like:

- Appeal to force (argumentum ad baculum): “If you let this person talk, there will be violence.”

- Appeal to consequences: “Letting them speak might make people upset, so we shouldn’t allow it.”

Indeed. We have a lot of these vetoes nowadays. I see too many safe spaces as places where people who MIGHT upset others are not even let in.