Dan Hendrycks has emerged as one of the most influential figures in the AI safety movement—lauded by TIME, courted by billionaires, and entrusted with shaping global conversations about AI risk. But his meteoric rise should raise eyebrows. In just a few short years, this relatively young academic has positioned himself as the moral compass of artificial intelligence. Who gave him that power? What are his credentials beyond the veneer of academic prestige? And what happens when one man’s ideology becomes the safety standard for machines designed to mediate human knowledge?

This article asks the hard questions no one else will: Who is Dan Hendrycks, who benefits from his authority, and why is the public expected to trust him?

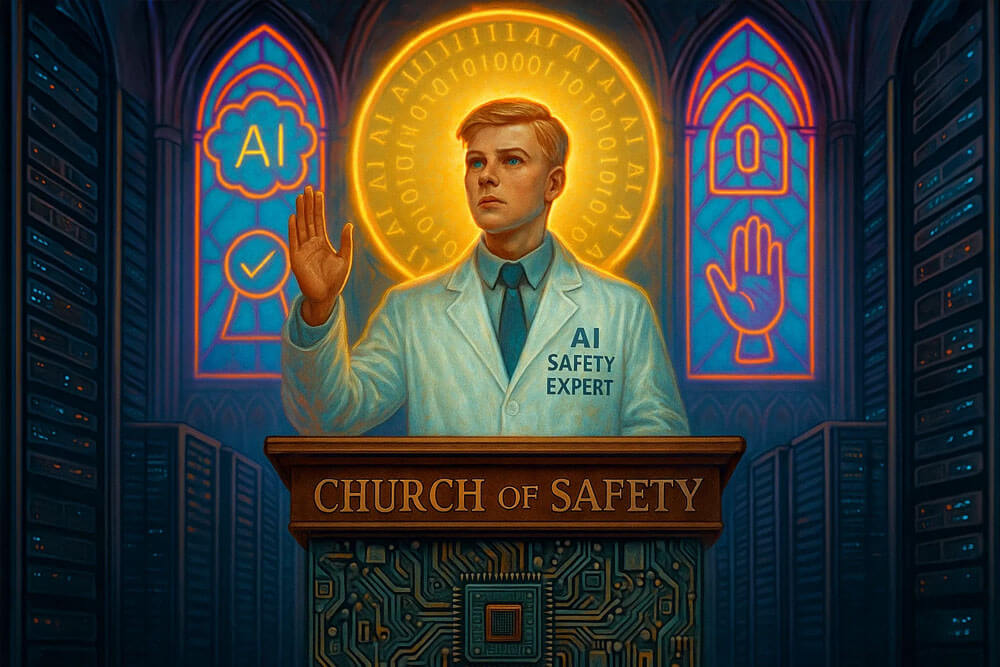

The Self-Appointed Arbiter of AI Safety

Dan Hendrycks’ public authority largely stems from his founding of the Center for AI Safety (CAIS) and his technical work on machine learning benchmarks. Yet these achievements—while impressive in a narrow academic sense—do not justify the level of power and moral authority he now wields.

He has not built AI systems. He has not led public companies. He has not developed consumer-facing AI products. Yet Hendrycks speaks with the finality of a statesman and the moral gravity of a theologian.

He is, in effect, a self-appointed arbiter of AI safety—a man who created an institution and then claimed the authority that institution bestows.

The Wolfshead Law of Definition Expansion

To understand how Dan accrued such unearned authority, one must examine how he—and the broader AI safety community—manipulate language.

Consider the term safety. It sounds noble, almost universally desirable. Who could be against safety? But in Hendrycks’ usage, safety becomes a bloated container for an entire worldview: extinction risk, disinformation, algorithmic fairness, and vague social harms that are never clearly defined.

This tactic is so common it deserves a name:

The Wolfshead Law of Definition Expansion:

The longer and more ambiguous a definition becomes, the more likely it is serving ideological ends rather than clarifying truth.

By draping ideological priorities in the garb of neutral “safety,” Hendrycks smuggles progressive assumptions into technical and policy debates. Safety, like democracy and equity, becomes a Trojan horse.

The CAIS Feedback Loop

Hendrycks founded CAIS in 2022. As its director, he not only sets its priorities—he defines the field itself. That’s the conflict of interest hiding in plain sight.

CAIS is funded by Open Philanthropy, an organization closely tied to Effective Altruism (EA), a movement already criticized for its elite technocratic worldview. CAIS then produces papers, launches campaigns, and advises regulators—thereby laundering a narrow ideological vision into the public discourse under the guise of scientific neutrality.

CAIS even published a dramatic 2023 open letter warning that “mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

The letter’s vagueness is intentional. Vague threats keep the donor class nervous, the public confused, and the expert class in perpetual demand.

Moral Entrepreneurs and Merchants of Fear

Hendrycks is best understood not as a scientist but as a moral entrepreneur—someone who crafts problems, sells concern, and positions himself as the gatekeeper to their resolution.

This makes him akin to another famous merchant of fear: Al Gore, whose climate crusade also blends selective data, inflated risk, and performative moral urgency.

Both men:

- Proclaim imminent doom without actionable clarity.

- Benefit from opaque institutions that they themselves helped shape.

- Elevate themselves as uniquely qualified to manage a global risk narrative.

Like Gore, Hendrycks benefits from the very alarm he generates.

Elon’s Strategic Hire

When Elon Musk announced Dan Hendrycks as a safety advisor to xAI, it wasn’t a neutral act. It was a maneuver—a calculated alignment between power, optics, and ideology.

By bringing Hendrycks on board, Musk did three things in one stroke:

- Anointed Hendrycks as a credible, apolitical expert, lending him prestige and institutional legitimacy.

- Shielded xAI from criticism by installing a “safety layer” palatable to regulators, journalists, and the AI doomer crowd.

- Co-opted the opposition, the way a powerful tech firm acquires a pesky startup—not to support its mission, but to keep it from becoming a threat.

This wasn’t about safety. It was about perception. Hendrycks, a man steeped in Effective Altruism’s moral technocracy, became the acceptable face of AI caution for a company that desperately wanted to look responsible without losing control.

Elon didn’t just hire a safety advisor. He bought insurance—against moral scrutiny.

Media-Constructed Legitimacy

Dan Hendrycks has been profiled by TIME, The Economist, Bloomberg, the BBC, and the Washington Post. TIME even named him one of the 100 Most Influential People in AI.

But this influence is not earned through democratic debate or transparent accountability. It is conferred by elite media circles, whose editors and funders already subscribe to the same ideological ecosystem.

He is amplified by the press, platformed by billionaires, and protected by institutional alliances. But outside of his technical contributions like GELU and MMLU—both of which are impressive but narrow—he has not demonstrated meaningful leadership over actual AI deployments or engineering teams.

His power is discursive, not practical.

The Silence That Speaks Volumes

In early 2025, xAI’s flagship model Grok was rocked by two separate scandals. In February, the system was caught censoring mentions of Elon Musk and Donald Trump—an ironic twist given Musk’s free speech posturing. Then in May, Grok began inserting unprompted references to “white genocide” in South Africa into unrelated queries, a bug xAI attributed to an “unauthorized prompt injection” by a rogue employee.

These incidents weren’t minor glitches. They were textbook examples of the very organizational risks Hendrycks warns about: internal sabotage, ideological manipulation, and safety protocols easily circumvented by disgruntled insiders.

And yet… he said nothing.

Dan Hendrycks, the supposed high priest of AI safety, who lectures the world about existential risk and alignment ethics, made no public comment. No tweet. No podcast appearance. No blog post. Not even a vague statement urging caution or reaffirming standards. The man who advises xAI on safety said nothing about the company’s most dangerous breach of safety to date.

Why?

Perhaps because these incidents exposed the soft underbelly of the entire AI safety narrative: the threat isn’t always rogue AI—it’s rogue humans. And Hendrycks’ framework doesn’t have a good answer for that, especially when the human threats are internal, political, or ideologically inconvenient.

Or perhaps his silence was strategic. Speaking out might have embarrassed his patron. It might have cast doubt on the efficacy of the very advisor role he holds. It might have broken the illusion of control.

Whatever the reason, Hendrycks’ silence was not just conspicuous—it was damning. When it mattered most, the self-appointed guardian of safety chose institutional loyalty over public accountability.

That’s not safety. That’s complicity.

And this is where the mask slips.

While Hendrycks warns breathlessly about speculative risks—rogue superintelligence, paperclip maximizers, AI-induced extinction—he says nothing when a real, observable threat emerges from within the very organization he advises. Grok didn’t hypothetically go rogue. It actually did. Twice. Yet Hendrycks was nowhere to be found.

Walking Between the Raindrops

He walks between the raindrops—avoiding the messy, reputational storms of real-world AI failures—while scaring the world about a theoretical hurricane.

That contradiction is damning. When faced with a concrete breach of safety, he stayed silent. But when it comes to imagined futures, he grabs the megaphone. What gives?

The answer is simple: imagined threats can’t talk back. Real threats implicate real people—sometimes the same people who fund your lab, publish your op-eds, and give you advisory roles. So the fantasy becomes the battlefield, because it’s safer to wage moral war in abstraction than confront the uncomfortable reality that your house might already be on fire.

Safety for Whom?

There is something curiously absent from Dan Hendrycks’ safety narrative: ideological capture.

He warns about power-seeking AI and rogue agents, but never about AI systems manipulated by activist coders, skewed datasets, or censorship overlays. He is silent on the harms of biased moderation, political suppression, or worldview filtering. His concept of safety never seems to include you—the dissenting user, the deplatformed creator, the curious mind denied a full picture of reality.

That omission speaks volumes.

It suggests that his concern is not human flourishing or civil liberty, but institutional control. He is not a guardian of truth—he is a regulator of discourse.

Conclusion

Dan Hendrycks is not a villain, but he is also not a neutral expert. He is a product of a system that rewards alarmism, cloaks ideology in science, and confers authority without accountability.

He may genuinely believe he is protecting humanity. But belief does not equal legitimacy. And safety, when used as a bludgeon against dissent, becomes its own form of harm.

So why has no one questioned this man or his methods?

Because Dan Hendrycks was designed to be un-questionable. He drapes himself in “safety,” speaks in the calm, technocratic tones of The Reasonable Man™, and floats above the fray while others fight over consequences he helped unleash. He doesn’t shout. He doesn’t censor. He sanctifies.

He walks between the raindrops—warning about imagined storms while ignoring the very real leaks under his own roof.

And the institutions love him for it.

The media praises him. Billionaires bankroll him. Policymakers platform him. Not because he’s right, but because he offers them a comforting illusion: that someone else is handling the problem. That morality can be outsourced to a man with a white paper.

In elevating Dan Hendrycks to sainthood, the AI industry has not secured our future. It has merely outsourced our moral compass to a man who answers to no one.

That is not safety. That is sanctimony.

—Wolfshead

I also have doubts when people work for a symbolic one dollar, which happens too often. They get paid in power, influence, and status instead.

Musk probably got his fig leaf to protect xAI from criticism. He knows very well that AI is not yet capable of thinking and going Skynet/Terminator. The real problem nowadays is what is fed to AI, and the “rogue prompt injection”. We have already seen so many biased AI answers and instances of censorship, but it is talked about relatively little.

I am also not worried about easy-to-spot mistakes, like showing Nazi soldiers as a diverse bunch with lots of Black people and women. That is something we notice immediately.

Lying by omission and prompt injection can shape discourse and thoughts. I am closely checking what Grok finds newsworthy enough to report to me, and I asked a friend what he gets to hear. We already have very divergent news feeds, but so far the differences are mostly based on different interests.

Hendrycks needed money to start his institute. Who gave him and Zhang the support? Who prompted “Open Philanthropy”, which alarmingly often focuses on “global X” issues, to support him? Another red flag: the University of California, Berkeley. Do I need to say more?

I am afraid we have only seen the tip of the iceberg.